This is very similar to “Perfect labels via prompting”: both are about getting classification out of an LLM through prompting.

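The OP finds that whether or not the prompt ends with a trailing space matters a lot, and that leaving the space off is the better option. Thinking about logits, this kinda makes sense: many tokenizers attach a word's leading space to the word's own token, so a prompt that already ends in a space pushes the model away from the label tokens it usually sees.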
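To see why the trailing space can matter, here is a toy illustration. It assumes a made-up vocabulary and a greedy longest-match tokenizer (a simplification of real BPE); the only real-world property it borrows is that GPT-2-style BPE tokenizers fold a word's leading space into that word's token.

```python
from typing import List

# Hypothetical toy vocabulary. Like GPT-2-style BPE vocabularies, it folds a
# word's leading space into the word's token (" Positive"), but it is made up.
VOCAB = ["Sentiment", ":", " Positive", " Negative", " ", "Pos", "itive", "Neg", "ative"]


def tokenize(text: str, vocab: List[str] = VOCAB) -> List[str]:
    """Greedy longest-match tokenizer: a simplification, not real BPE merging."""
    by_length = sorted(vocab, key=len, reverse=True)
    tokens: List[str] = []
    i = 0
    while i < len(text):
        match = next((t for t in by_length if text.startswith(t, i)), None)
        if match is None:
            raise ValueError(f"no token covers {text[i:]!r}")
        tokens.append(match)
        i += len(match)
    return tokens


if __name__ == "__main__":
    print(tokenize("Sentiment: Positive"))  # ['Sentiment', ':', ' Positive']
    print(tokenize("Sentiment: "))          # ['Sentiment', ':', ' ']
    print(tokenize("Positive"))             # ['Pos', 'itive']
```

Text like `Sentiment: Positive` ends in the single token `" Positive"`, but a prompt ending in `"Sentiment: "` has already spent the space on its own token, so the label has to come out as the rarer split `Pos` + `itive`.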

They also find that adding “(Positive/Negative Only)” to the end of the prompt improves labeling accuracy. They count how many labels the LLM gets right for each prompt variant and recommend the best-performing one.

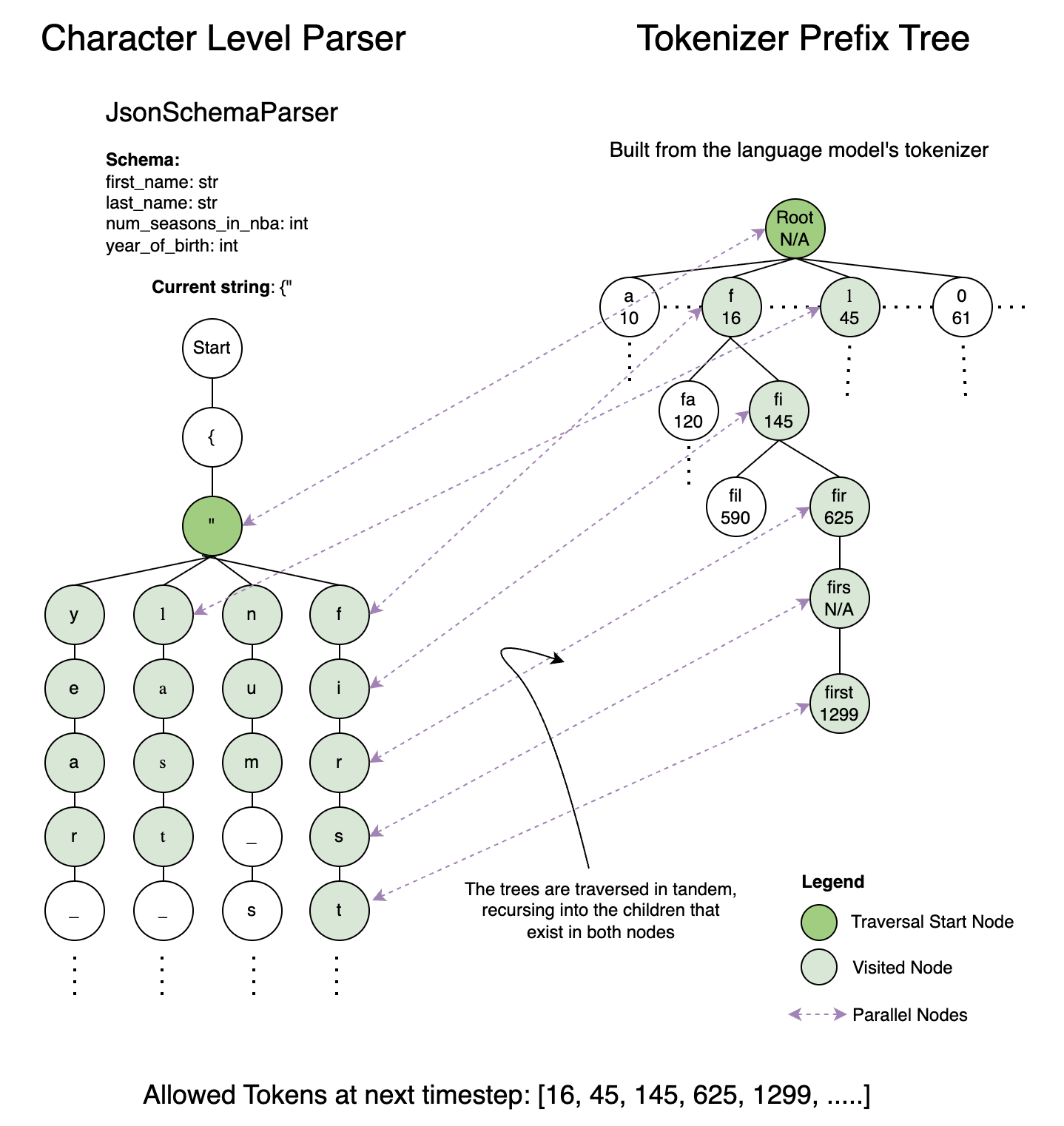
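The per-prompt comparison described above boils down to a small evaluation loop. This is a minimal sketch, not the OP's code: the two prompt templates, the three example reviews, and `stub_classify` (a keyword rule standing in for the real LLM call, which ignores the template entirely) are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple


def score_prompts(
    templates: Dict[str, str],
    data: List[Tuple[str, str]],
    classify: Callable[[str], str],
) -> Dict[str, float]:
    """Return the fraction of examples each prompt template labels correctly."""
    scores: Dict[str, float] = {}
    for name, template in templates.items():
        correct = sum(
            classify(template.format(text=text)).strip() == label
            for text, label in data
        )
        scores[name] = correct / len(data)
    return scores


def stub_classify(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; replace with a real model."""
    return "Positive" if "love" in prompt.lower() else "Negative"


# Hypothetical prompt variants (note: no trailing space after the colon).
TEMPLATES = {
    "plain": "Review: {text}\nSentiment:",
    "label_only": "Review: {text}\nSentiment (Positive/Negative Only):",
}
# Hypothetical labeled examples.
DATA = [
    ("I love this phone", "Positive"),
    ("The battery died in a day", "Negative"),
    ("Not something I would love to buy again", "Negative"),
]

if __name__ == "__main__":
    scores = score_prompts(TEMPLATES, DATA, stub_classify)
    print(scores, "best:", max(scores, key=scores.get))
```

With a real model behind `classify`, the scores would differ per template; the `.strip()` keeps a leading-space label token like `" Positive"` from counting as wrong.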

Notably, the OP asks for feedback, and one of the comments asks whether it would be better to constrain the model's output by defining and enforcing a grammar. The OP explores the options and finds an approach that enforces a format without restricting the model's responses. In the more specific case of following a JSON schema like:

- `first_name: str`
- `last_name: str`
- `num_seasons_in_nba: int`

A “character level parser” enforces which tokens are allowed at each timestep, and also structures this as a tree so that it maps correctly onto whatever tokens the tokenizer produces at any timestep.

This ensures that the LLM can “choose” the order of the JSON elements “it wants”.
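The thread describes this approach only in prose, so here is a minimal sketch of the same idea under heavy simplifying assumptions: a hand-written character-level parser for just the three-field schema above (no whitespace, no string escapes, non-negative integers only) and a prefix tree over a made-up vocabulary. A token is allowed if the parser accepts every one of its characters, and walking the tree lets one rejected prefix prune every token that starts with it. All names here are hypothetical, not taken from any library discussed in the thread.

```python
from typing import Dict, List, NamedTuple, Optional

# Hypothetical schema from the example above: field name -> value type.
SCHEMA: Dict[str, str] = {"first_name": "str", "last_name": "str", "num_seasons_in_nba": "int"}
DIGITS = "0123456789"


class State(NamedTuple):
    mode: str          # which part of the JSON object we are inside
    used: frozenset    # keys that have already been written
    buf: str = ""      # partial key (or integer) read so far
    key: str = ""      # key whose value is currently being read


START = State("start", frozenset())


def _close_value(used: frozenset, ch: str) -> Optional[State]:
    """After a value: ',' if keys remain, '}' only once every key is present."""
    remaining = set(SCHEMA) - used
    if ch == "," and remaining:
        return State("key_open", used)
    if ch == "}" and not remaining:
        return State("end", used)
    return None


def step(state: State, ch: str) -> Optional[State]:
    """Consume one character; return the next state, or None if ch is illegal."""
    remaining = set(SCHEMA) - state.used
    m = state.mode
    if m == "start":
        return State("key_open", state.used) if ch == "{" else None
    if m == "key_open":
        return State("key", state.used) if ch == '"' else None
    if m == "key":
        if ch == '"':  # closing quote: must complete an unused key
            return State("colon", state.used, key=state.buf) if state.buf in remaining else None
        buf = state.buf + ch  # any unused key may start here, in any order
        return State("key", state.used, buf) if any(k.startswith(buf) for k in remaining) else None
    if m == "colon":
        return State("value", state.used, key=state.key) if ch == ":" else None
    if m == "value":
        if SCHEMA[state.key] == "str":
            return State("str", state.used, key=state.key) if ch == '"' else None
        return State("int", state.used, ch, state.key) if ch in DIGITS else None
    if m == "str":  # simplification: escape sequences are rejected
        if ch == '"':
            return State("after_value", state.used | {state.key})
        return None if ch == "\\" else state
    if m == "int":
        if ch in DIGITS:
            return State("int", state.used, state.buf + ch, state.key)
        return _close_value(state.used | {state.key}, ch)
    if m == "after_value":
        return _close_value(state.used, ch)
    return None  # mode "end": the object is complete


def advance(state: Optional[State], text: str) -> Optional[State]:
    """Feed a whole string through the parser, one character at a time."""
    for ch in text:
        if state is None:
            return None
        state = step(state, ch)
    return state


def build_trie(vocab: List[str]) -> dict:
    """Prefix tree over the vocabulary; the key None marks a complete token."""
    root: dict = {}
    for tok in vocab:
        node = root
        for ch in tok:
            node = node.setdefault(ch, {})
        node[None] = tok
    return root


def allowed_tokens(state: State, node: dict) -> List[str]:
    """Tokens whose characters the parser all accepts; rejected branches are pruned."""
    out: List[str] = []
    for ch, child in node.items():
        if ch is None:
            out.append(child)
        else:
            nxt = step(state, ch)
            if nxt is not None:
                out.extend(allowed_tokens(nxt, child))
    return out
```

For example, with a vocabulary containing `first`, `last` and `num`, the allowed tokens right after `{"` are exactly those three, so the model is free to open with whichever field it prefers, while a repeated or premature closing of the object is rejected.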

With that context in mind, consider the rest of the thread.

Some commenters bring up the “Guidance” tool, which enforces an exact output format. The OP tries it and concludes that it makes classification tasks with LLMs easy. However, a later chain of comments points out that tools which enforce outputs exactly may not let the model generate in the order and fashion it “wants to”. The commenters discuss other tools that give the model more flexibility, and the OP then writes that this makes them more confident that better prompting is the more fundamental fix for LLM labeling performance: “feels good to know my work in trying to get the model to output the label first isn’t a waste of time haha”
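For a classification task, exact-format enforcement can be as blunt as only letting the model pick among the label tokens. The sketch below is a generic illustration of that idea, not Guidance's API or implementation: it assumes you already have the next-token logits (the numbers in the usage example are invented) and renormalizes them over the allowed labels with a softmax.

```python
import math
from typing import Dict, List


def label_distribution(logits: Dict[str, float], labels: List[str]) -> Dict[str, float]:
    """Softmax over the allowed label tokens only; every other token is masked out."""
    missing = [label for label in labels if label not in logits]
    if missing:
        raise KeyError(f"no logit for label tokens: {missing}")
    top = max(logits[label] for label in labels)  # subtract the max for numerical stability
    exps = {label: math.exp(logits[label] - top) for label in labels}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


if __name__ == "__main__":
    # Invented next-token logits: unconstrained, the model would prefer " The".
    logits = {" Positive": 3.0, " Negative": 1.0, " The": 5.0}
    dist = label_distribution(logits, [" Positive", " Negative"])
    print(max(dist, key=dist.get))  # the label token " Positive"
```

Because only the label tokens survive the mask, the model can no longer start with anything else (here the invented `" The"`), which is both why this makes classification easy and why commenters worry it stops the model from generating in the order it “wants to”.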

This is an interesting example of why multiple streams of feedback matter: had the OP simply gone with the first recommendation without exploring what it does and how it affects outputs, they would have written off their own prompting approach and effort as pointless.