Abstract

AI-infused systems have demonstrated remarkable capabilities in addressing diverse human needs within online communities. Their widespread adoption has shaped user experiences and community dynamics at scale. However, designing such systems requires a clear understanding of user needs, careful design decisions, and robust evaluation. While research on AI-infused systems for online communities has flourished in recent years, a comprehensive synthesis of this space remains absent. In this work, we present a systematic review of 77 studies, analyzing the systems they propose through three lenses: the challenges they aim to address, their design functionalities, and the evaluation strategies employed. The first two dimensions are organized around four core aspects of community participation: contribution, consumption, mediation, and moderation. Our analysis identifies common design and evaluation patterns, distills key design considerations, and highlights opportunities for future research on AI-infused systems in online communities.

- How have AI-infused systems been designed to address challenges in online communities?

- Contribution support

- Consumption support

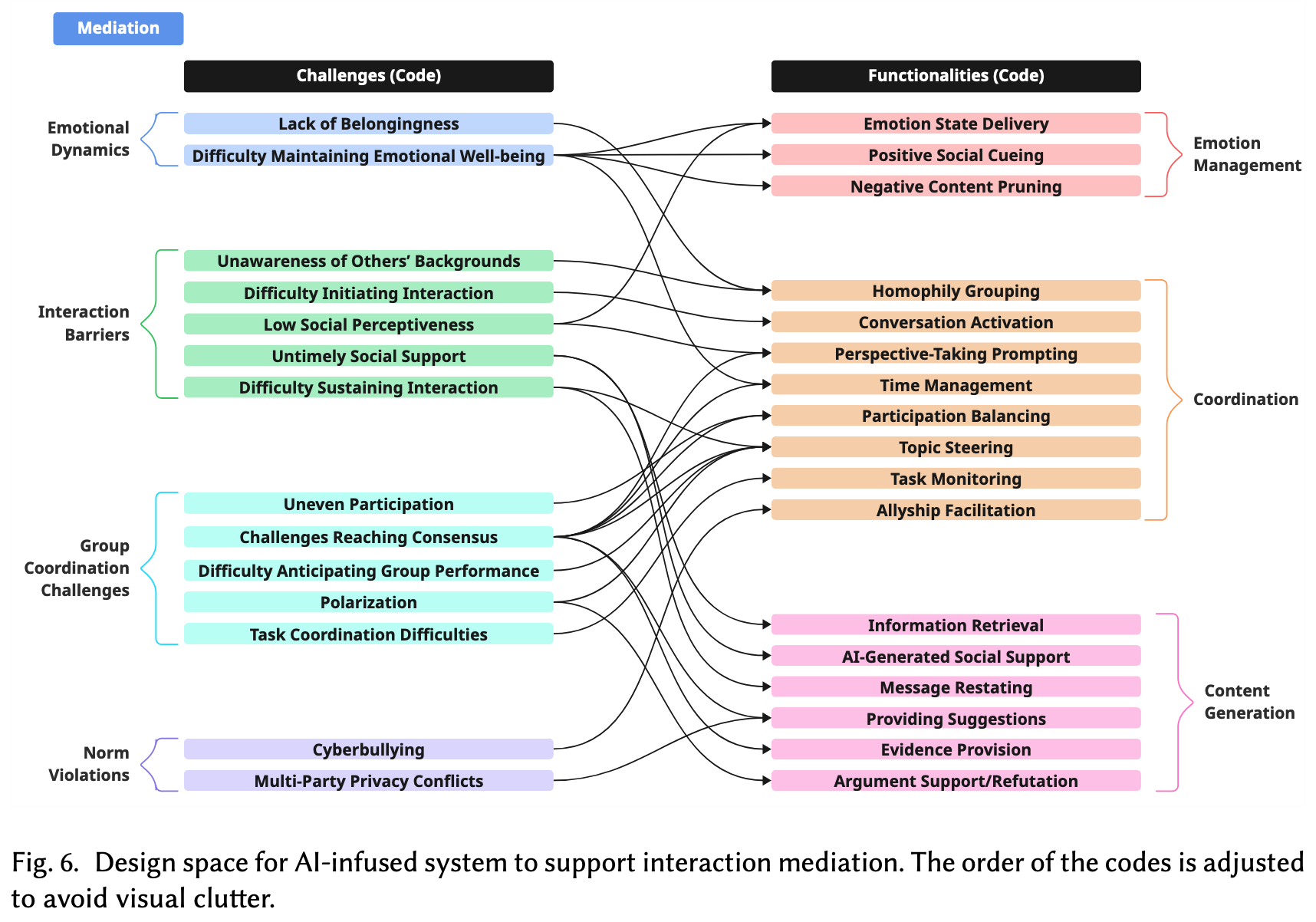

- Interaction mediation

- Moderation support

- How have existing studies evaluated the impact of AI-infused systems in online communities?

- Observation

- Questionnaire

- Interview

Some citations that I think might be useful:

- Motivational support

  - “delivery of encouragement messages [26, 30, 55, 68, 81, 91, 139], incorporating praise or invitations to contribute—targeted either at individuals or entire groups.” (Zhang et al., 2025, p. 8)

- Ability support

  - “writing recommendation and guidance [16, 76, 81, 86, 99, 121, 122], where the system generates or retrieves relevant content to address writing challenges, such as locating contribution angles and organizing thoughts.” (Zhang et al., 2025, p. 9)

- Critical thinking support

  - “facilitating exposure to diverse perspectives [35, 42, 61, 131, 152]” (Zhang et al., 2025, p. 11)

- Coordination

  - “prompt stakeholders to engage in perspective-taking [124] by considering diverse viewpoints within the group.” (Zhang et al., 2025, p. 13)

  - “steer the direction [10, 30, 40, 42, 45, 55, 63, 76, 91, 109, 121, 123] of discussions to keep collaboration focused and aligned with collective goals such as reaching consensus” (Zhang et al., 2025, pp. 13-14)

- Content generation

  - “restating a member’s comment [30] or explicitly supporting or contesting others’ viewpoints [19, 44, 68].” (Zhang et al., 2025, p. 14)

  - “retrieve contextually relevant information [7–9, 25, 41, 49] to advance collaborative tasks” (Zhang et al., 2025, p. 14)

  - “attaching relevant evidence or sources [41, 42, 49] to support their outputs.” (Zhang et al., 2025, p. 14)

- Automated decision support

  - “content flagging [15, 48, 50, 56, 70, 108], where systems either calculate indicative metrics for moderator review or directly perform text classification.” (Zhang et al., 2025, p. 16)

Should we select evals based on these categories?
