Abstract

Limited large-scale evaluations exist for facilitation strategies of online discussions due to significant costs associated with human involvement. An effective solution is synthetic discussion simulations using Large Language Models (LLMs) to create initial pilot experiments. We propose design principles based on existing methodologies for synthetic discussion generation. Based on these principles, we propose a simple, generalizable, LLM-driven methodology to prototype the development of LLM facilitators by generating synthetic data without human involvement, and which surpasses current baselines. We use our methodology to test whether current Social Science strategies for facilitation can improve the performance of LLM facilitators. We find that, while LLM facilitators significantly improve synthetic discussions, there is no evidence that the application of these strategies leads to further improvements in discussion quality. In an effort to aid research in the field of facilitation, we release a large, publicly available dataset containing LLM-generated and LLM-annotated discussions using multiple open-source models. This dataset can be used for LLM facilitator finetuning as well as behavioral analysis of current out-of-the-box LLMs in the task. We also release an open-source python framework that efficiently implements our methodology at great scale.

“Facilitation” is used here as a synonym for mediation.

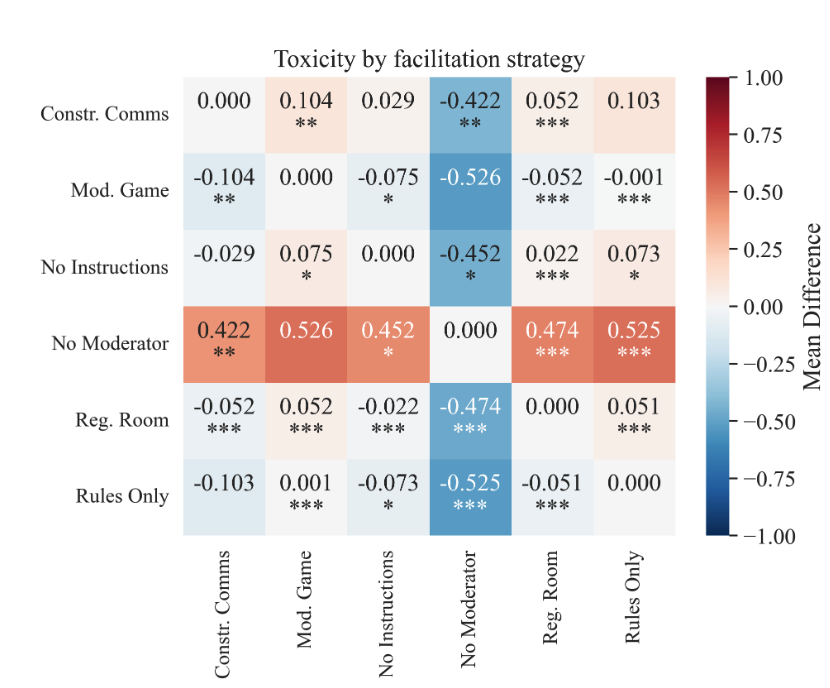

They find that LLM facilitators improve synthetic discussions, but they find no evidence that applying the specific facilitation strategies improves discussion quality any further.

They test six facilitation conditions (five moderator setups plus a no-moderator baseline):

- No moderator

- No instructions: the LLM is given only a basic prompt such as “You are a moderator, keep the discussion civil.”

- Rules only: “Be fair and impartial, assist users, don’t spread misinformation”. Derived from LLM alignment guidelines.

- Regulation Room: inspired by the Regulation Room platform. “Stick to a maximum of two questions, use simple and clear language, deal with off-topic comments.”

- Constructive communications: inspired by the MIT Center for Constructive Communication. “Do not make decisions, be a guide, provide explanations.”

- Moderation game: “User is toxic: -5 points, User corrects behavior: +10 points.”
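To make the six conditions concrete, here is a minimal sketch of how they could be represented as moderator prompts, plus how a running score for the moderation game could be tallied from the two rules quoted above. Everything here is an assumption for illustration: the names `STRATEGY_PROMPTS`, `build_system_prompt`, `GAME_POINTS`, and `game_score` are hypothetical, the prompt strings are the short paraphrases from these notes rather than the paper's actual prompts, and this is not the API of the released framework.

```python
from typing import Optional

# Hypothetical mapping from condition name to moderator instructions.
# The strings are the paraphrases quoted in these notes, not the paper's prompts.
STRATEGY_PROMPTS: dict[str, Optional[str]] = {
    "no_moderator": None,  # baseline: no moderator takes part in the discussion
    "no_instructions": "You are a moderator, keep the discussion civil.",
    "rules_only": "Be fair and impartial, assist users, don't spread misinformation.",
    "regulation_room": (
        "Stick to a maximum of two questions, use simple and clear language, "
        "deal with off-topic comments."
    ),
    "constructive_communications": (
        "Do not make decisions, be a guide, provide explanations."
    ),
    "moderation_game": (
        "User is toxic: -5 points, User corrects behavior: +10 points."
    ),
}


def build_system_prompt(strategy: str) -> Optional[str]:
    """Return the moderator prompt for a condition, or None for the baseline."""
    if strategy not in STRATEGY_PROMPTS:
        raise ValueError(f"Unknown strategy: {strategy!r}")
    return STRATEGY_PROMPTS[strategy]


# Only the two scoring rules mentioned in the notes; the event labels are assumed.
GAME_POINTS = {"toxic": -5, "corrects_behavior": 10}


def game_score(events: list[str]) -> int:
    """Sum the points for a sequence of observed events; unknown events score 0."""
    return sum(GAME_POINTS.get(event, 0) for event in events)
```

For example, `build_system_prompt("no_moderator")` returns `None`, and `game_score(["toxic", "corrects_behavior"])` adds -5 and +10.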

Again, I don’t like this because it’s all LLMs: an LLM does the commenting and an LLM does the annotating, with no human check anywhere in the loop.