Abstract

Traditional AI-assisted decision-making systems often provide fixed recommendations that users must either accept or reject entirely, limiting meaningful interaction—especially in cases of disagreement. To address this, we introduce Human-AI Deliberation, an approach inspired by human deliberation theories that enables dimension-level opinion elicitation, iterative decision updates, and structured discussions between humans and AI. At the core of this approach is Deliberative AI, an assistant powered by large language models (LLMs) that facilitates flexible, conversational interactions and precise information exchange with domain-specific models. Through a mixed-methods user study, we found that Deliberative AI outperforms traditional explainable AI (XAI) systems by fostering appropriate human reliance and improving task performance. By analyzing participant perceptions, user experience, and open-ended feedback, we highlight key findings, discuss potential concerns, and explore the broader applicability of this approach for future AI-assisted decision-making systems.

Notes:

- Interaction is between one human and one AI, not multiple humans and multiple AIs, so this isn’t scaled to groups.

- Think about the problem space of decision-making and if that’s the direction I want to pursue.

They define deliberation as “thoughtful and reasoned discussion” that facilitates “constructive discourse and consensus-building” through individuals “rigorously evaluating different perspectives” and letting participants “refine their viewpoints through informed discussions about opinion discrepancies”.

They use this definition to address two challenges they see with AI-assisted decision-making—triggering people’s analytical thinking when presented with AI’s suggestions, and reaching consensus in a bidirectional manner between the AI and the human.

They divide their problem space of decision-making into three parts:

- Decision: overall choice to be made

- Dimension: attributes that affect decision making

- Opinion: assessment of a dimension’s impact on the overall decision

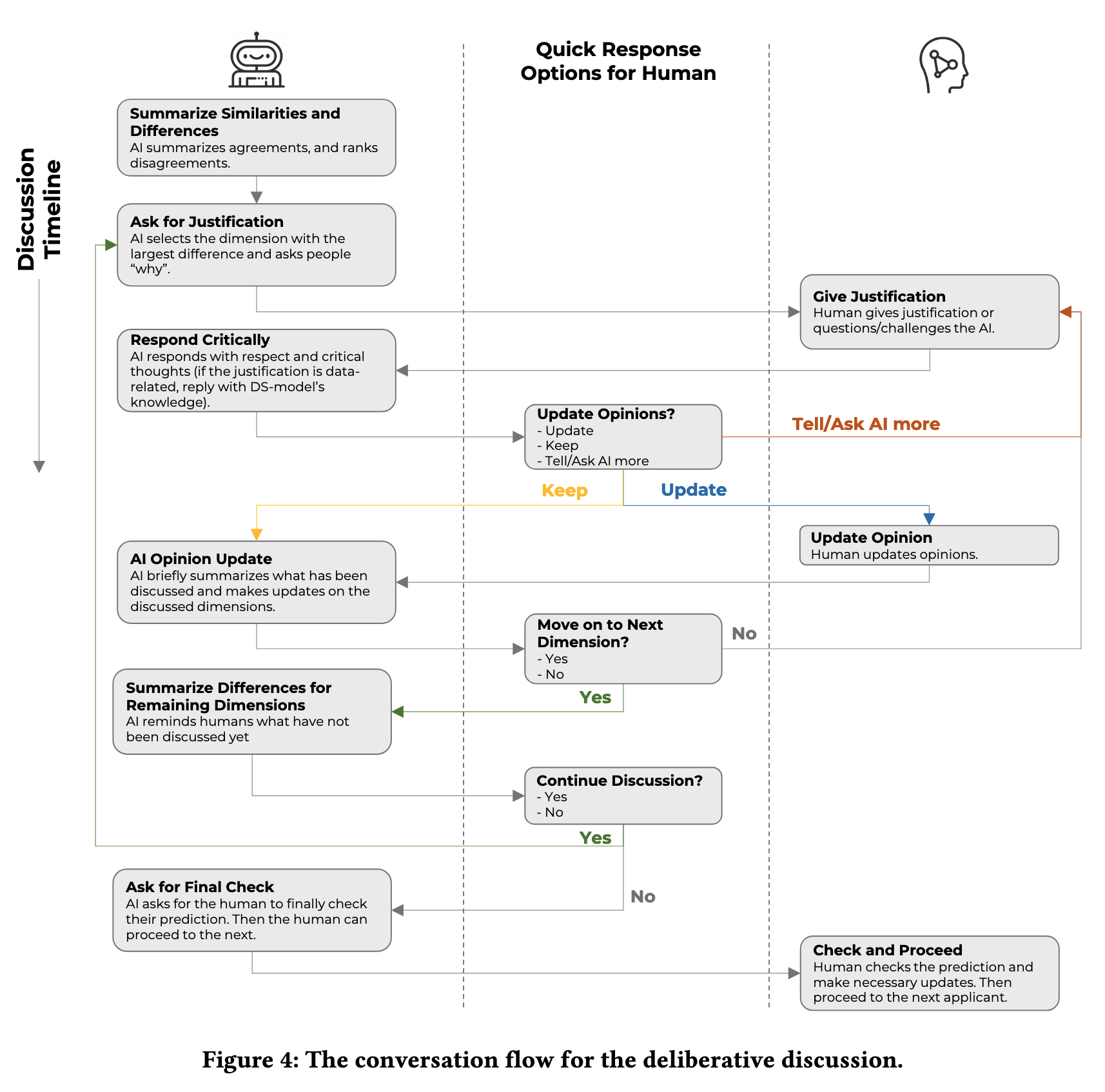
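To make the three parts concrete for myself, here is a minimal sketch of how they could be represented. Everything in it is my own assumption and not the paper's implementation: the class names `Opinion` and `Assessment`, the signed numeric weight (positive supports, negative opposes), and the choice to sum weights into a single score are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Opinion:
    """Assessment of one dimension's impact on the overall decision."""
    dimension: str      # attribute that affects the decision, e.g. "GPA"
    weight: float       # assumed scale: > 0 supports the decision, < 0 opposes it
    evidence: str = ""  # free-text justification for the weight


@dataclass
class Assessment:
    """One party's (human or AI) view of a single decision."""
    decision: str
    opinions: Dict[str, Opinion] = field(default_factory=dict)

    def add(self, dimension: str, weight: float, evidence: str = "") -> None:
        # Store or overwrite the opinion for this dimension.
        self.opinions[dimension] = Opinion(dimension, weight, evidence)

    def net_support(self) -> float:
        # Illustrative aggregation only: sum of signed weights.
        return sum(o.weight for o in self.opinions.values())


# Hypothetical example in the admissions setting.
human_view = Assessment(decision="Applicant receives an offer")
human_view.add("GPA", 2.0, "Well above the typical admitted range")
human_view.add("Research", -1.0, "No publications listed")
```

The point of the structure is that disagreement can be located per dimension instead of only at the level of the final decision.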

Then they employ an LLM that works at the dimension level, focusing on how each dimension supports or opposes a decision. They require both the AI and the human to substantiate their opinions with evidence and to assess its credibility and probative value (the opinion, or Weight of Evidence). Steps:

- Both the human and the AI are elicited to produce their thoughts

- Human and AI’s viewpoints are processed and differences are presented

- Human and AI discuss: substantiating their opinions, clarifying evidence, and explaining weight assignments

- Lastly, they are given the chance to “update their thoughts”
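The steps above can be sketched as one round of a loop. This is only my rough mental model, not the authors' system: opinions are reduced to one signed number per dimension, the function names and the `threshold` and `openness` parameters are hypothetical, and the numeric update rule is a placeholder for the discussion step, which in the paper is an actual conversation and not a formula.

```python
def find_disagreements(human, ai, threshold=1.0):
    """Step 2: list shared dimensions whose opinions differ by at least
    `threshold`, largest gap first (ties broken alphabetically)."""
    shared = human.keys() & ai.keys()
    gaps = {d: abs(human[d] - ai[d]) for d in shared}
    flagged = [d for d in gaps if gaps[d] >= threshold]
    return sorted(flagged, key=lambda d: (-gaps[d], d))


def update_opinion(current, other, openness=0.25):
    """Steps 3-4 stand-in: move `current` toward `other` by a fraction
    `openness` (0 = never budges, 1 = adopts the other view)."""
    return current + openness * (other - current)


def deliberate_round(human, ai, threshold=1.0, openness=0.25):
    """Run one round: detect differences, then let both sides update
    only on the dimensions that were flagged for discussion."""
    to_discuss = find_disagreements(human, ai, threshold)
    new_human, new_ai = dict(human), dict(ai)
    for d in to_discuss:
        new_human[d] = update_opinion(human[d], ai[d], openness)
        new_ai[d] = update_opinion(ai[d], human[d], openness)
    return to_discuss, new_human, new_ai


# Step 1 (elicitation) is represented by these hypothetical starting opinions.
human = {"GPA": 2.0, "Research": -1.0}
ai = {"GPA": 2.0, "Research": 1.0}
discussed, human_after, ai_after = deliberate_round(human, ai)
```

Here only "Research" is flagged, and both sides move partway toward each other without being forced to agree, which matches the idea that updating is optional and bidirectional.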

There is more to the design and it is very beautiful, but since our problem spaces are different and my time is limited I’m not going into detail.

They compare their “Deliberative AI” tool to traditional XAI tools to see whether it increases task performance in a graduate admissions context, where participants weigh dimensions of an applicant’s profile to predict their chance of getting an offer. They found that it outperforms traditional explainable AI systems by fostering appropriate human reliance and improving task performance.

They conclude that their system is well suited for decision-making tasks where decision quality outweighs the need for speed or convenience, as they found that 31% of the conversations involve participants questioning the AI’s logic. They also write that addressing conflicts is more beneficial than just seeking consensus, as conflicts serve as a lens to uncover underlying flaws and biases.

More notably, they write that humans and AI think differently: humans use heuristics and logic, while AI relies on data. They suggest that future designs should align with human intuition and cognitive processes.